Future-proofing your AI investment in data extraction and document processing: taking a build, buy or partner approach

To succeed today, businesses must find innovative ways to optimize the value of a vital commodity: their data. With more information available than ever before, organizations are sitting on a goldmine of data to help guide their strategic and tactical decision-making. However, analyzing the sheer volume of data available can be both challenging and costly. This is especially true given that an estimated 80% of all organizational data is unstructured, requiring extensive processing before it can be used. Thankfully, technological advancements in artificial intelligence (AI), natural language processing (NLP) and machine learning (ML) have enabled companies to harvest data, make it usable, and analyze it in an easier, more cost-effective way. These advancements in unstructured data processing can help to remove operational bottlenecks, reduce costs, support or replace legacy systems, and unite cross-functional team efforts around data access and management.

Unlocking the value of your organizational data does still require investment, but several options are now available. Some companies choose to build data processing infrastructure in-house, which requires money, time and human effort. It can also mean diverting resources from other projects, often for long periods of time. Alternatively, some decide to buy a specialist solution. This option comes with licensing costs and requires research to ensure the solution chosen meets your specific requirements, but it takes less time and fewer resources than building a solution in-house. Another choice is to partner with a provider who already has a platform on which you can fast-track the build of your own solutions. Careful consideration is crucial for those investing in data extraction and document processing solutions. Strategies set before the pandemic to build, buy or partner should be revisited, as pain points and user needs have shifted, and working and operating models have changed.

Many businesses are also dealing with market volatility, increased competition and ongoing regulatory and compliance needs. This has required many companies to pivot from previous plans, putting additional pressure on their developers and IT teams. AI is also advancing rapidly. Data science teams that take a research-led approach to developing their solutions can reap the benefits by continually improving the functionality, accuracy and scalability of their tools. Whether you choose to build, buy or partner, you should ensure your document processing and data extraction solutions are flexible enough to accommodate change requests, can meet stringent data security requirements, and provide end-users with the accurate, precise and up-to-date data they need to make your business successful. Below, we look at why these three factors are especially important for projects of this nature.

Flexibility matters

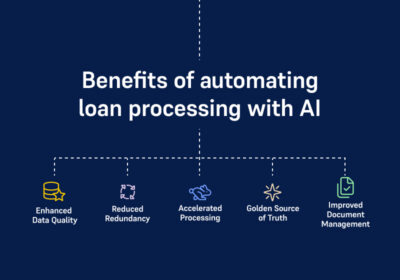

The more quickly a company can digitize its data extraction and document processing activities, the sooner revenue or savings can be recognized from these efforts. Implementing a solution quickly and effectively with minimal disruption is key. But so is maintaining those tools and ensuring they are flexible enough to meet your company’s changing needs. The data science tools you choose must keep pace with internal initiatives and external forces that will impact data requirements. Can the solution accommodate all the necessary document types and unstructured data sources? Can it be customized for different use cases and functions? Will it be able to scale as your business grows and evolves? Will it cope with changes to workflows and processes? Does it require extensive training for end-users to use effectively? Does it integrate with all your existing repositories and databases?

One of the great things about deep tech is that it’s always improving and evolving. But keeping up with the fast pace and successfully productionizing those improvements is also one of the biggest challenges. Often, the more data teams have to work with, the more additional data they’ll want to access, explore and drill down on. As such, you can expect your business needs to grow and the questions to become more granular. Finding a flexible data solution that can grow with your business is critical.

Data security

Needless to say, data security matters. Your organization’s data is valuable and should be protected, handled and stored securely. Breaches are increasingly common, and the risks of data getting into the wrong hands need to be taken seriously. Any data solution you choose should meet or exceed information security standards and continue to do so as regulations change or you move into markets with different regulatory requirements. Whether hosted on-premises or in the cloud, you should feel confident that your data is secure from outside threats.

The right solution can also protect your organization against the loss of data expertise by systematizing it. By building your machine learning models based on the data requirements of your internal subject matter experts, you capture that intelligence and retain it should those individuals move on. Ideally, your data solution will enable you to establish a comprehensive, secure ‘golden source of truth’ data lake that helps your business to achieve its goals.

Data accuracy

If your data extraction and document processing solution doesn’t deliver on accuracy, then you’ve not delivered a solution; you’ve simply automated a problem. Bad data leads to bad decisions and will ultimately cost your business in one way or another. Generally speaking, custom-built, targeted machine learning models outperform pre-built generic models when it comes to accuracy, as they’ve been purpose-made and trained to handle the specific documents and data requests users are throwing at them. Rules-based solutions will also struggle to achieve highly accurate results unless you have dedicated engineering support to maintain them in production.

A solid approach to model risk management is imperative to achieving accurate data results. Model performance evaluation methods, such as cross-validation, should be implemented to provide a range of metrics. These metrics, such as precision and recall, highlight different aspects of model performance. Confidence flags, plugin validators and a manual remediation workflow help to ensure high-quality, accurate results. For those use cases that require near-total precision, a human-in-the-loop approach can get you to 99.5% data accuracy with fewer than 10% of answers requiring human review and intervention.
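To make the metrics and confidence flags above more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any particular product: the `Extraction` record, the field names, the sample values and the 0.9 threshold are all hypothetical. Precision is the share of answers given that are correct, recall is the share of existing answers that were found correctly, and any answer below the confidence threshold is flagged for human review. In practice these metrics would typically be computed on each fold of a cross-validation run rather than on a single hand-made sample.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Extraction:
    """One extracted data point (hypothetical structure for illustration)."""
    name: str                  # which field was requested
    predicted: Optional[str]   # the model's answer, or None if it gave none
    expected: Optional[str]    # ground truth from a labelled evaluation set
    confidence: float          # model confidence between 0 and 1


def precision_recall(items: List[Extraction]) -> Tuple[float, float]:
    """Precision = correct / answers given; recall = correct / answers that exist."""
    answered = [e for e in items if e.predicted is not None]
    correct = sum(1 for e in answered if e.predicted == e.expected)
    existing = sum(1 for e in items if e.expected is not None)
    precision = correct / len(answered) if answered else 0.0
    recall = correct / existing if existing else 0.0
    return precision, recall


def route_for_review(
    items: List[Extraction], threshold: float = 0.9
) -> Tuple[List[Extraction], List[Extraction]]:
    """Auto-accept confident answers; flag everything else for a human."""
    accepted: List[Extraction] = []
    flagged: List[Extraction] = []
    for e in items:
        if e.predicted is not None and e.confidence >= threshold:
            accepted.append(e)
        else:
            flagged.append(e)
    return accepted, flagged


# Made-up sample data, purely for demonstration.
SAMPLE = [
    Extraction("governing_law", "England", "England", 0.97),
    Extraction("termination_date", "2025-01-31", "2025-01-31", 0.91),
    Extraction("notice_period", "30 days", "60 days", 0.55),
    Extraction("counterparty", None, "Acme Ltd", 0.20),
]

if __name__ == "__main__":
    precision, recall = precision_recall(SAMPLE)
    accepted, flagged = route_for_review(SAMPLE, threshold=0.9)
    print(f"precision={precision:.2f} recall={recall:.2f}")
    print(f"auto-accepted={len(accepted)} sent to review={len(flagged)}")
```

The threshold is the lever in a human-in-the-loop setup: raising it sends more answers to reviewers and should raise the accuracy of what is auto-accepted, while lowering it reduces review effort at the cost of more unchecked errors.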

Companies that successfully productionize solutions that unlock the full value of their data will reap the benefits for years to come. Solutions that can consistently, securely and accurately process information, while retaining the ability to flex with company goals and industry changes, will stand the test of time.

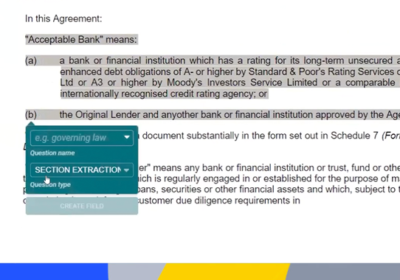

The Eigen intelligent document processing platform uses NLP to understand context and accurately identify words, short phrases or sections of text, then extract that specific data. Model risk management is a core Eigen principle, and in competitive benchmarking exercises we routinely deliver better accuracy on fewer training documents. We provide industry-leading security by design, including an ISO-accredited cloud offering, to fully protect your organizational data.

Our extensible APIs and plugin architecture connect the platform to your document repositories and downstream systems to enable the straight-through processing of data. This flexibility allows you to transform workflows and optimize data flow across your organization, from document ingestion and normalization through to manual remediation, with a full audit trail and a user-friendly interface.

Let us help you to supercharge your organization’s use of unstructured data. Get in touch to request a demo or speak to one of our solution consultants about your requirements.

- World Economic Forum 2020
- Gartner Cool Vendor 2020
- AI 100 2021
- Lazard T100
- FT Intelligent Business 2019
- FT Intelligent Business 2020
- CogX Awards 2019
- CogX Awards 2021
- Ai BreakThrough Award 2022
- CogX Awards Best AI Product in Insurance
- FStech 2023 awards shortlisted
- ISO27001
- ISO22301
- ISO27701
- ISO27017
- ISO27018