Large Language Models (Part 1 of 3): How can Intelligent Document Processing Leverage the ChatGPT Revolution?

Blog Part 1: Building a Fighter Jet: How do Large Language Models fit into IDP?

By Dr Lewis Z Liu – Co-founder and CEO at Eigen Technologies

It has been impossible to avoid the hype around ChatGPT, with new articles and stories breaking every day since it launched in November 2022. While we see no need to switch our AI stack exclusively to GPT-X itself, we do think it is important to delve into large language models, the type of model that powers the latest wave of generative AI solutions such as ChatGPT, so that we can explain their relevance and application within our specialist field of intelligent document processing. In this blog series, we explore large language models (LLMs for short) and their potential applications within the world of intelligent document processing (IDP), as well as the challenges and risks they bring.

What are GPT-4, ChatGPT, BERT and Large Language Models?

Given the topic, what better way to explain this than to have ChatGPT write the introduction:

“In recent years, there has been a rapid development in the field of artificial intelligence and machine learning, leading to the creation of advanced large language models (LLMs) such as GPT-4, Chat-GPT, BERT, and others. These models are designed to process and generate natural language, allowing them to perform tasks such as language translation, sentiment analysis, and even creative writing. As the technology behind these language models continues to improve, they are becoming increasingly prevalent in various industries, from marketing and advertising to healthcare and finance. In this blog post, we will delve into the world of large language models, exploring what they are, how they work, and their potential applications.”

Stephen Wolfram's article "What Is ChatGPT Doing … and Why Does It Work?" also offers a good semi-technical explanation of ChatGPT.

How do Large Language Models (LLMs) fit into Intelligent Document Processing (IDP)?

Imagine building a fighter jet. You need to bring together a ton of different components, from structural pieces like the wings and fuselage to more complex components like the engines and avionics. A working fighter jet is only complete when all these pieces come together.

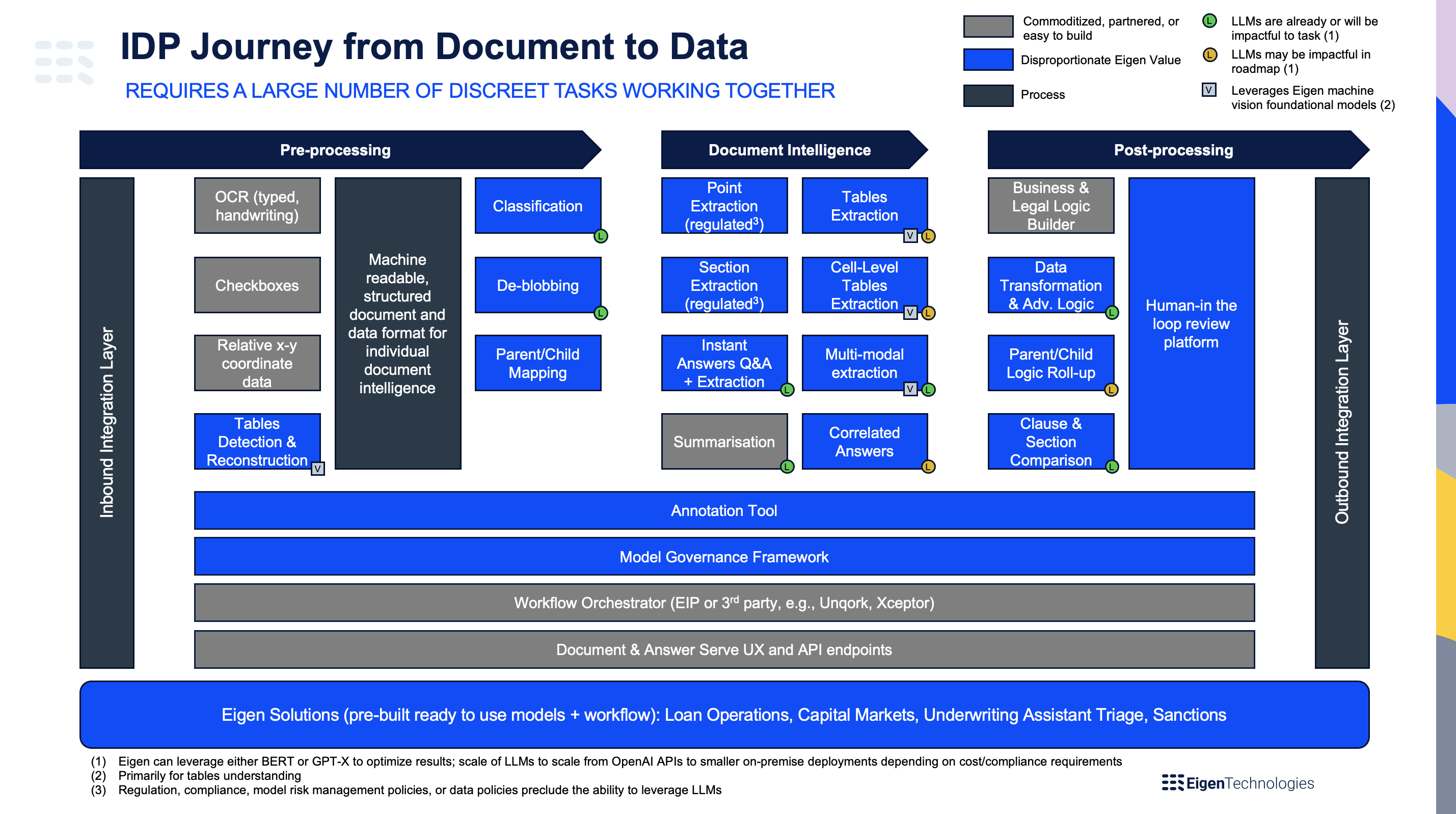

Building an IDP platform is similar. There is an entire infrastructure and workflow pipeline that is analogous to the fuselage and wings. Model training and model governance are needed, much like the avionics. And, of course, the interpretive AI models themselves are the engine that powers the machine. Diagram 2 below shows all the components that make up the Eigen IDP platform.

To further complicate matters, IDP is not simply a case of querying the text strings within a document. Documents also carry a visual language, which can take the form of tables (a huge problem), checkboxes, or graphs. This means the AI models need to be multimodal, marrying natural language processing (NLP) models (such as GPT-X) with machine vision.

So, what does this mean in the context of GPT-4? In the fighter jet analogy, it means we can swap one core component of the engine for another that lets the jet fly faster and be more manoeuvrable under certain conditions. In IDP terms, GPT-4 can extract and interpret the answers to certain questions in certain (mostly text-only) documents better than previous models. In the world of generative AI and chat, GPT-4/ChatGPT is revolutionary, but in the world of IDP it is essentially ‘just’ an additional tool that can potentially be swapped in under certain conditions.

This is not meant to downplay the potential impact. In the fighter jet analogy, it could mean a cruise speed of Mach 1.5 versus Mach 1 for an older version: the rest of the plane is fundamentally unchanged, but the jet is significantly more performant for certain tasks. Similarly, in IDP, a bigger, more powerful LLM such as GPT-4 can mean better data extraction accuracy, more flexibility, and the ability to handle more complex tasks that were previously handled with rules. While LLMs cannot make an impact everywhere in the IDP data flow, more powerful models like GPT-4 may enable new tasks previously thought impossible, such as ‘chatting with your documents live’, an exciting functionality we plan to release in Q2.
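To make the "swappable engine" idea concrete, here is a minimal Python sketch, assuming a hypothetical `ExtractionEngine` interface that any model must implement to answer a question about a document's text. It is not Eigen's architecture or API. All class names (`ExtractionEngine`, `RegexEngine`, `LLMEngine`, `Pipeline`) are illustrative, and `fake_complete` stands in for a real LLM client, so no model is actually called. The point is that the surrounding pipeline stays the same while the engine behind it changes from a rules-based extractor to an LLM-backed one.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Protocol


class ExtractionEngine(Protocol):
    """Hypothetical interface: any model that answers a question about a document's text."""

    def extract(self, text: str, question: str) -> Optional[str]: ...


class RegexEngine:
    """Rules-based engine: each supported question maps to a hand-written regular expression."""

    def __init__(self, patterns: Dict[str, str]) -> None:
        self.patterns = patterns

    def extract(self, text: str, question: str) -> Optional[str]:
        pattern = self.patterns.get(question)
        if pattern is None:
            return None  # rules only cover questions someone has written a pattern for
        match = re.search(pattern, text)
        return match.group(1) if match else None


class LLMEngine:
    """LLM-backed engine. `complete` is a placeholder for whatever model client is used."""

    def __init__(self, complete: Callable[[str], str]) -> None:
        self.complete = complete

    def extract(self, text: str, question: str) -> Optional[str]:
        prompt = f"Document:\n{text}\n\nQuestion: {question}\nAnswer:"
        answer = self.complete(prompt).strip()
        return answer or None  # treat an empty reply as "not found"


@dataclass
class Pipeline:
    """The rest of the pipeline stays fixed; only the engine is swapped."""

    engine: ExtractionEngine

    def run(self, text: str, questions: List[str]) -> Dict[str, Optional[str]]:
        return {q: self.engine.extract(text, q) for q in questions}


doc = "This Agreement is governed by the laws of England and Wales."
questions = ["What is the governing law?"]

# Start with a rules-based engine.
pipeline = Pipeline(engine=RegexEngine({
    "What is the governing law?": r"governed by the laws of ([A-Z][\w ]+?)\.",
}))
rules_result = pipeline.run(doc, questions)


# Swap in an LLM-backed engine without touching the pipeline.
def fake_complete(prompt: str) -> str:
    """Stand-in for a real LLM call; always returns a canned answer."""
    return " England and Wales\n"


pipeline.engine = LLMEngine(complete=fake_complete)
llm_result = pipeline.run(doc, questions)

print(rules_result)  # {'What is the governing law?': 'England and Wales'}
print(llm_result)    # {'What is the governing law?': 'England and Wales'}
```

The design choice worth noting is that the regex engine can only answer questions someone has anticipated, while the LLM engine accepts free-form questions. This is the flexibility gain described above, and the pipeline code stays the same either way.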

In the next blog in this series, we will look in greater detail at the opportunities and applications of LLMs and GPT-X in the IDP technology stack.

Get in touch to find out more about Eigen's intelligent document processing capabilities or request a platform demo.
