Hype or game-changer? What does AI really mean for the insurance industry? The experts’ opinion.

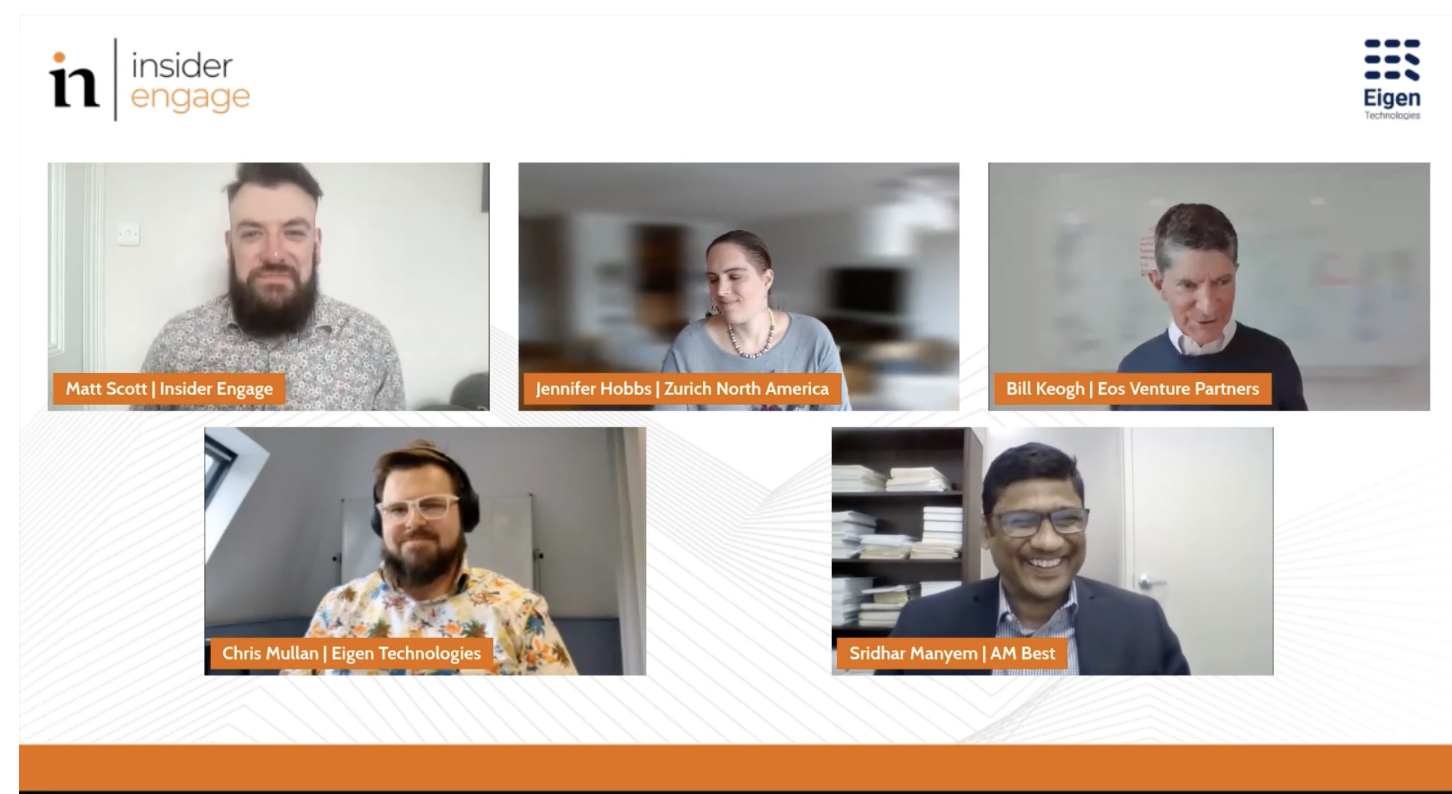

This May, in partnership with Insider Engage, we hosted a webinar with a panel of insurance industry and technology experts to demystify the topic of AI and how the latest wave of Generative AI tools like ChatGPT can provide operational value.

The panel comprised Jennifer Hobbs, VP, Lead Data Scientist for Zurich North America; Bill Keogh, Operating Partner, Eos Venture Partners; Sridhar Manyem, Senior Director, Industry Research and Analytics, at AM Best; and our SVP of Product, Chris Mullan, with Matt Scott of Insider Engage as moderator.

With a record number of webinar registrants, attendees and audience questions, the demand for information on this topic was clear. The panel quickly got to work explaining what large language models (LLMs, such as GPT-4, which powers ChatGPT) are, and which insurance use cases and operational pain points they can address. The panel also ran through the challenges, risks and privacy concerns that accompany them.

The webinar was recorded, and you can (re)watch it here.

With so many questions submitted from the audience, the panel were unable to answer them all during the one-hour session, so we’ve answered the three most frequently posed questions below.

What people want to know about AI and LLMs in insurance

Here are the answers to the top 3 questions posed by attendees during the LLM and AI in Insurance webinar.

Q1: How can LLMs be used for claims fraud detection?

A1: Whilst there are many useful applications of AI in claims fraud detection, there is as yet no clear use case for LLMs. LLMs are generative models: their purpose is to create written content from a given prompt, whereas claims fraud is a problem better suited to discriminative (prediction) models. There are plenty of useful AI tools in this space, from voice analytics to detecting image and document tampering, but no clear LLM application yet.
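To illustrate the distinction between generative and discriminative models, here is a minimal sketch of a discriminative model: a logistic-regression classifier that outputs a single fraud probability for a claim rather than generating text. The features (claim amount, days since policy start, number of prior claims), the toy data and the function names are all hypothetical, chosen for illustration only; a production fraud model would use far richer data and an established ML library.

```python
import math

# Hypothetical claim features: (claim amount in $10k, days since policy start / 100, prior claims).
# Labels: 1 = fraudulent, 0 = legitimate. Toy data for illustration only.
CLAIMS = [
    ((0.5, 3.0, 0), 0),
    ((0.8, 2.5, 1), 0),
    ((1.2, 4.0, 0), 0),
    ((4.5, 0.1, 3), 1),
    ((3.8, 0.2, 2), 1),
    ((5.0, 0.3, 4), 1),
]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Discriminative output: P(fraud | features), a single score rather than generated text."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def train(data, lr=0.1, epochs=2000):
    """Fit logistic regression with plain batch gradient descent on the log-loss."""
    n_features = len(data[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in data:
            error = predict(weights, bias, features) - label  # gradient of log-loss w.r.t. z
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - lr * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(data)
    return weights, bias


weights, bias = train(CLAIMS)
new_claim = (4.2, 0.15, 2)  # large claim, very new policy, several prior claims
print(f"Estimated fraud probability: {predict(weights, bias, new_claim):.2f}")
```

The model never writes anything; it only maps a claim's features to a score, which is the kind of output a fraud triage process needs.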

However, there is an upcoming challenge: increased fraud attempts that use generative image or document tools to fake the documents supporting a claim, and we don’t yet have tools that can reliably detect this kind of forgery. For example, GPTZero, a tool designed to detect text written by GPT models, is still fairly inaccurate in its determinations.

Q2: Will regulating AI stop people from innovating within insurance?

A2: No. Regulation won’t stop innovation, but it does place some limits on where and how AI can be applied. For now, that isn’t much of a problem, given the scope of the untapped possibilities that still exist across insurance.

Today’s regulations demand that key risk-taking decisions and customer outcomes are explainable, and that’s a challenge for run-of-the-mill neural networks, let alone something as complex as GPT-4. While its exact training and architecture details have not been made public, it is safe to assume it is orders of magnitude larger than its predecessor, GPT-3, which was itself substantial at 175 billion parameters.

There is still plenty to be done around automation, risk selection, analytics, claims fraud, and product innovation, all in ways that aren’t blocked by regulation.

Q3: Will future LLMs be powerful and accurate enough to replace underwriters?

A3: Not in our opinion. LLMs are like the world’s most powerful predictive text, able to predict not just one or two words ahead but entire pages of text. Their power comes from the sheer volume of training data used to teach them the patterns of human writing, and from the number of “tokens” (words or word fragments) they can take into account at once.

They are great at generating code, passages of human-sounding text and the like, but they don’t have actual knowledge and they can’t make actual decisions.
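The “predictive text” analogy can be made concrete with a toy next-word predictor. The sketch below counts which word follows which in a tiny, made-up corpus (a bigram model) and generates text by repeatedly appending the most frequent next word. This is a deliberate simplification and not how any particular LLM is built: real LLMs use large neural networks over subword tokens and condition on thousands of previous tokens rather than one word. The underlying task, predicting what comes next, is the same, and the model reproduces patterns in its training text with no notion of whether what it writes is true. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

# Tiny, made-up training corpus (hypothetical; real LLMs train on vast amounts of text).
CORPUS = (
    "the underwriter reviews the submission . "
    "the underwriter prices the risk . "
    "the broker sends the submission ."
)


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often every other word immediately follows it."""
    words = text.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model: dict[str, Counter], start: str, max_words: int = 10) -> str:
    """Greedily append the most frequent next word until a full stop or max_words is reached."""
    output = [start]
    while len(output) < max_words and output[-1] in model:
        next_word = model[output[-1]].most_common(1)[0][0]
        output.append(next_word)
        if next_word == ".":
            break
    return " ".join(output)


model = train_bigrams(CORPUS)
print(generate(model, "the"))
```

Always picking the single most likely word quickly falls into repetitive loops on a corpus this small, which is one reason practical text generators sample from the predicted distribution instead.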

We can see a future in which an LLM built with insurance in mind, with the guardrails needed to protect against hallucinations and the auditability demanded in regulated industries, becomes a useful tool that helps all kinds of decision-makers interrogate submissions, create risk summaries and the like. But that is still a little way off.

Thank you to all the panelists for the informed discussion.

How can AI help your underwriters today? Eigen’s Underwriter Assistant solution uses AI effectively and safely to support underwriters with time-consuming document review and data gathering/analysis processes so they can focus on the risks and opportunities that matter most. Click here to learn more about Underwriter Assistant.
