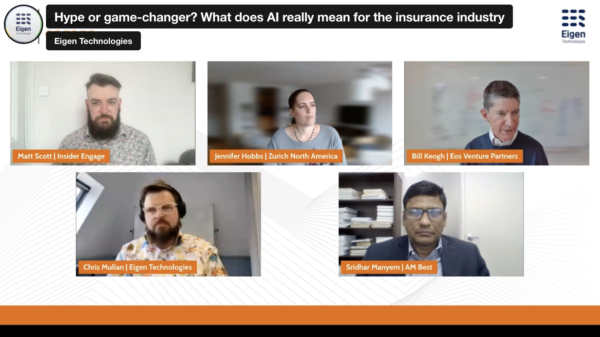

Webinar write-up: What does AI really mean for the insurance industry? Is it hype or a game-changer?

In May, we hosted a webinar in partnership with Insider Engage on the use and value of generative AI and large language models (LLMs for short) within the insurance industry. Matt Scott of Insider Engage moderated the discussion and has also written up the webinar for us; you can read his article below.

You can find the original version of this article published here on the Insider Engage website.

Want to tune in to the webinar? You can watch it on demand here, or catch the webinar highlights here.

Is AI really set to revolutionise the insurance industry?

By Matt Scott of Insider Engage

Over the last few months, it seems everyone has been talking about large language models – or LLMs – and how they are set to revolutionise the way insurance companies operate. But what do these models mean for the insurance industry? And do they really live up to the hype?

That’s exactly what was discussed in our latest webinar, “Hype or game-changer? What does AI really mean for the insurance industry?”, hosted in partnership with Eigen Technologies.

Panellist Jennifer Hobbs, who is the Vice President and Lead Data Scientist for Zurich North America, said that while artificial intelligence (AI) has been around since the 1950s and 1960s, recent advancements have really accelerated the capabilities of this technology, which in turn has caught the public’s attention – and excited many in the insurance industry as well.

“Within natural language processing you have this new set of algorithms called large language models (LLMs), and these are really growing out of advances in the deep learning space,” she said. “The thing that is really exciting about these LLMs is firstly that they are large, which gives them huge capacity to store information, and therefore make broad predictions.

“But the other really unique things about this is that they're pre-trained, which means they don't need a lot of labels.”

And because LLMs are pre-trained, they can make an instant impact on an organisation, without the need for large volumes of development work.

“Whereas you used to have to train a model for machine translation, a model for entity extraction or a model for Q&A, out of the box these models are doing a really good job across a number of tasks,” Hobbs said. “And I think that's what's really leading to a lot of the excitement – the fact that they are able to handle unseen tasks, unseen prompts, and unseen pieces of data that they haven't been trained on.”

LLMs present a new type of risk

But this pre-training is also what makes LLMs a risky proposition, with Eigen Technologies Senior Vice President of Product Chris Mullan citing ChatGPT as one example of the risks presented by such models.

“It’s a bit like mansplaining-as-a-service, because it’s very confidently and diligently explaining something that it actually has no knowledge of, but it sounds very reputable,” he said. “And there’s a whole bunch of problems with the language, the data, and the bias.

“When it comes to the accuracy of ChatGPT, OpenAI makes absolutely no claims whatsoever.”

And Sridhar Manyem, Senior Director for Industry Research and Analytics at AM Best, said that the explainability of LLMs is also an issue when it comes to speaking to the regulator.

“LLMs create a different answer each time you ask them something, because they are trying to solve for some kind of goal, and they adjust the weights on different nodes, and they come up with different answers,” he said.

Manyem went on to describe a situation where an LLM could come up with different prices for home insurance for houses with similar or even identical characteristics, and he questioned how this might be received by a regulator.

“The predictability of the model in terms of whether it will give the same answer or not becomes an issue, and how are you going to explain to a regulator and say this is my model, and this is how it works?” he asked. “That is going to be a very big issue, but I think this will be solved as regulators get educated on these kinds of technologies and we ourselves get more comfortable.”

But opportunities remain

Despite these risks, LLMs have been used by the insurance industry for a number of years, helping with tasks such as automating damage estimations or improving the customer experience.

But the uptake of open API-based solutions like ChatGPT has been much slower, largely due to the increased risks of bias and of passing potentially sensitive data to the model provider. Mullan said he hopes such API-based models are not being openly used by the industry, except in separated and experimental sandbox scenarios.

Outside of this, however, the panel were very much in agreement that AI and LLMs present very real benefits and opportunities for the insurance industry, and that they are certainly much more than mere hype.

Increasingly sophisticated chatbots that can take contextual information into account, and more accurate damage estimates, were just two of the examples given of where these models are already delivering real benefits to the insurance industry.

And Bill Keogh, operating partner for Eos Venture Partners, said that the possibilities for this technology only get greater as computational power continues to improve.

“The realm of the possible with technology and insurance today has grown exponentially over the last few years, particularly with ChatGPT,” he said. “And that’s building on years and years of Moore's Law coming into play, with computational power becoming cheaper, and just the incredible amount of capacity that we have.

“There's so many things that are happening right now, around the customer experience, improved financial performance of insurance companies, and improved risk management.”

But Keogh did have one warning amidst his optimism.

“I don't believe this is hype, I think this is very real,” he added. “And how it will manifest itself is through lots of experimentation, and the fact that ChatGPT is publicly available is a wonderful thing.

“But with the computational power comes an obligation on the side of the user, which is, you have to have really good data to feed these models, whether it's large language models, or any other kind of model.”

How can AI help your underwriters today?

Eigen Technologies’ Underwriter Assistant solution uses AI effectively and safely to support underwriters with time-consuming document review and data gathering/analysis processes so they can focus on the risks and opportunities that matter most.
